Discord data breach brings governance principles to the forefront in real time

This week I gave my presentation on the 12 major risks of poor social media governance at 3M’s Media Technology & Governance Summit. And as luck would have it, Discord provided us a real-time example that highlighted what can happen if some of those risks are ignored.

So, let’s break it down.

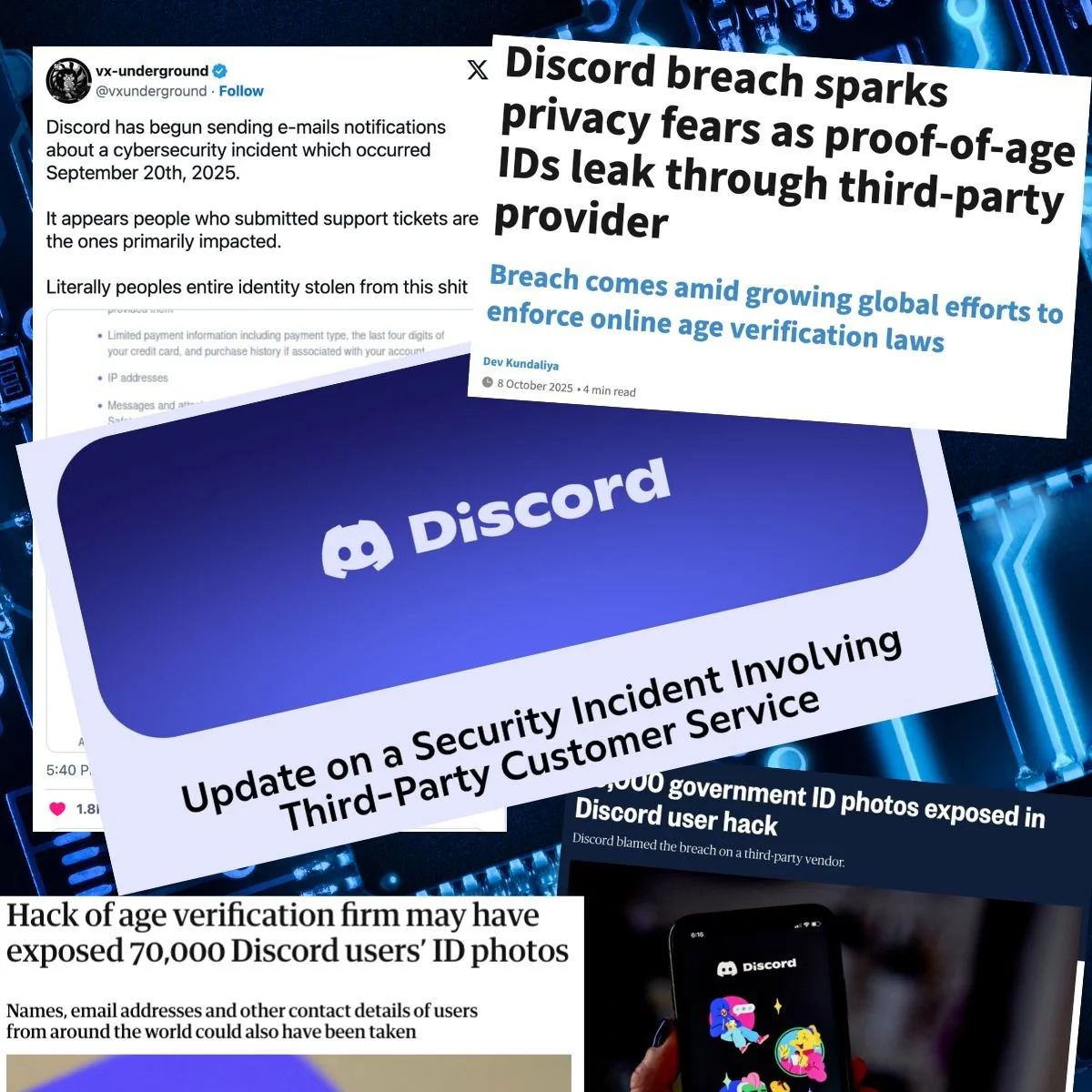

What happened? On Wednesday, my Google Alerts blew up with news articles about Discord having a data breach. Unfortunately, that’s not all that uncommon these days. Hackers were able to obtain users’ personal details, including usernames, passwords, email addresses, messages with support, payment info and – most horrifically – copies of people’s identification documents like driver’s licenses and passports, which folks had submitted to verify their age with the platform.

As more information came out on Thursday, the story got even more interesting (at least to me) in several ways and for several reasons. Somehow this single incident touches on FIVE of my 12 governance principles. That’s sad, but it also illustrates how good governance requires companies to be vigilant on multiple fronts, and it highlights the interdependencies between the different elements of governance.

So here’s what my brain started thinking about when I learned about the breach:

Risk #1: Risk To Your People: When I speak about social media governance, I highlight this risk because people who run Facebook ads on behalf of your company often must submit identification to Meta to prove they are a real person and not a bot. This means your social media team is sharing a photo ID like a driver’s license or passport (usually in a way that is not secure at all). In the Discord case, most of the IDs that were accessed were likely collected because people submitted them to verify their identity and/or their age. As more and more countries look at putting age restrictions on social media, this problem is going to get bigger and a lot worse. The platforms may think it’s a great idea to store millions of people’s photo IDs, but I guarantee you they are not prepared to fully protect that data. You cannot rely on the platforms to keep that data safe, so you must protect the people who are forced to hand over this personal information to do their jobs and do everything you can to help them stay safe. I would also like to see companies offer some sort of identity protection for this particular group of employees.

Another interesting wrinkle here is that we don’t really know how many IDs were compromised. The hackers claim they accessed 2.1 million IDs. Discord says it was 70,000.

We will probably learn eventually how many IDs were really compromised, but we don’t know yet. Discord chose NOT to pay the ransom (the hackers were asking for $5 million). According to SCWorld, the hackers have reduced the demand to $3.5 million, but we still have to wait and see how this story ends. As I wrote in my post “The Ethical Dilemma of Paying the Crooks,” the FBI officially discourages companies from paying ransom demands. But it’s definitely an ethical gray area, and it’s tough to argue that you shouldn’t pay the ransom if your entire operation has been crippled. The jury is definitely still out.

Risk #2: Third-Party Apps: One of the often-overlooked areas of digital governance is third-party apps. People connect their social media profiles to all kinds of tools and systems (whether officially approved or not), and they often grant access that they shouldn’t. These connected apps and systems are typically not audited regularly or evaluated for security at all.

In the Discord instance, the hackers got in not through one of Discord’s primary systems, but by hacking into its Zendesk support system. It was through Zendesk that they were able to access all of this sensitive information. It distinctly reminds me of the Florida water treatment plant that was hacked a few years back, in the area that was hosting the Super Bowl. In that case, hackers got in via a third-party system running on – wait for it – Windows 7, an operating system Microsoft no longer supported. Yikes. I’m sure Zendesk had more current software than that, but you see my point.

Companies MUST pay attention not only to the security of their primary systems but also of things that are connected to those systems. To do something really ballsy and quote myself, “it’s like locking the front door but leaving the window wide open.”

Risk #3: Risk To Your Brand: How much is your brand worth? Millions? Billions? Discord needs to be concerned both with mitigating the crisis and with the long-lasting effects on its reputation. As we all know, in a digital age, your reputation can be damaged in seconds. One screenshot is all it takes. One of my fellow speakers at 3M this week said “trust is earned in drops and lost in buckets.” That is so completely true. And when your consumers, or in this case users, have their faith shaken, it’s really hard to get it back. We don’t know yet whether Discord will lose a chunk of users over this, or whether any loss will be large enough to have a real financial impact. But it’s certainly possible.

Risk #4: Compliance & Regulatory Risk: First, a few definitions. When I say compliance risk, I mean compliance with the rules and guidelines that apply largely to companies in regulated industries. So, if your company is in healthcare or pharma, you have to worry about HIPAA, etc. Companies in the finance sector need to worry about complying with FINRA rules in their social communications (both public and private messages). When I say regulatory risk, I’m talking about laws and government rules, like GDPR in Europe, the FTC guidelines here in the United States and an ever-evolving patchwork of state-specific legislation.

Companies often do a poor job of tackling this anyway. But when a crisis like this happens, it puts a huge spotlight on the whole situation. If you are NOT following the guidelines or laws related to all these things, it’s totally going to come out in the course of managing the crisis. The outcome could vary widely. In the best-case scenario, your internal folks highlight the risk and take steps to mitigate it. In the worst-case scenario, the regulatory body in question decides to fine you over it, and in some cases those fines can reach tens of millions of dollars or euros. Try explaining that to your CEO.

Risk #5: Human Risk: People – even the best social media pros – make mistakes. I make mistakes. We’re only human. We still don’t know how the hackers got into Zendesk. But if I had to guess, someone’s password was weak and was cracked or guessed. Or maybe someone was duped by a deepfake and gave information to the hackers, believing they were talking to the help desk. There are a million ways the hackers may have gained access. But the truth is that the more companies work to make security top-of-mind for employees, talk about the importance of vigilance and caution people about the ramifications of not being diligent, the more likely it is that people will have their guard up when something is fishy (or phishy). You don’t have to do this through endless video trainings or negative reinforcement. In fact, the best practice here is to find a way to make it engaging and fun. You need a carrot, not a stick. But you must recognize that the more you emphasize this as an organization, the better equipped your people will be and the less likely you are to get trapped by a “human” risk. Your employees are the front line of this fight. Don’t leave them out there with water guns.

Obviously, this isn’t the end of the story on the Discord/Zendesk hack… and maybe I’ll do a follow-up post down the road as we learn more. But if you are ignoring digital and social governance, you could be next. This is happening more and more. I am no longer struggling to find examples for my slide decks.

Be vigilant, or be next.